The CSS selector will match all the link nodes with an ancestor containing the class page-numbers.

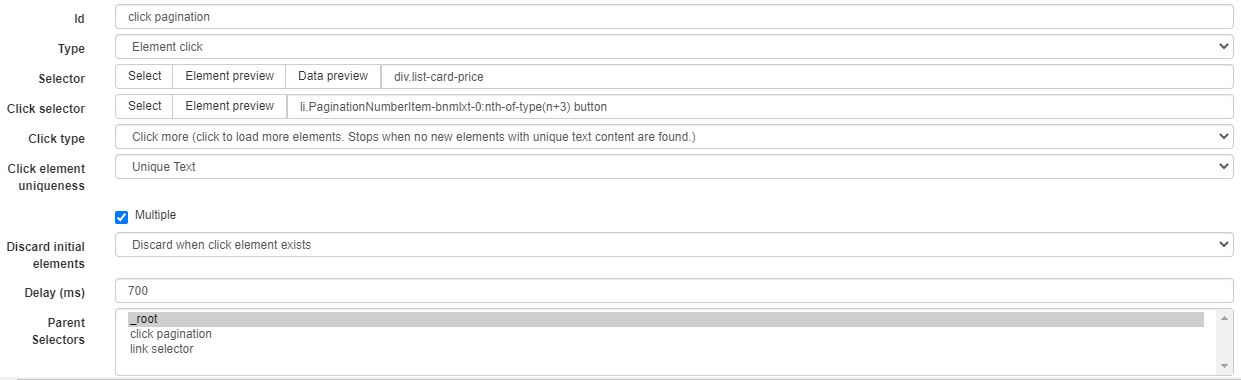

Then extract the URLs ( hrefs) from those. In our case, we only want the pagination links. If we were to write a full-site crawler, that would be the right approach. We could capture all the links on the page and then filter them by content. Pass the selector to the document.querySelector function and check the output. You can execute Javascript on the Console tab and check if the selectors are working correctly. Besides, it will only capture one of the pagination links, not all of them. This approach might be a problem in the future because it will stop working after any minimal change. But the outcome is usually very coupled to the HTML, as in this case: #main > div:nth-child(2) > nav > ul > li:nth-child(2) > a. On the Elements tab, right-click on the node ➡ Copy ➡ Copy selector. You can also use DevTools to get the selector. Check the guide if you are looking for a different outcome or cannot select it. In this case, all the CSS selectors are straightforward and do not need nesting. We marked the interesting parts in red, but you can go on your own and try it yourselves.

All modern web browsers offer developer tools such as these.

#Webscraper click links how to#

We will look at the page with Chrome DevTools open to know how to do that. Nice! Then, we can query the two things we want right now using cheerio: pagination links and products. We'll use scrapeme.live as an example, a fake web page prepared for scraping. We installed Axios for that, and its usage is straightforward. The Javascript web scraping ecosystem is huge! How to web scrape with Javascript? And many more focused on one task, such as table scraper. All of them are widely used and properly maintained.Īpart from these, there are several alternatives to each of them. Is Node JS good for web scraping?Īs you've seen above, tools are available, and the technology is consolidated. It will then parse the content, execute Javascript and wait for the async content to load. Playwright "is a Node.js library to automate Chromium, Firefox and WebKit with a single API." When Axios is not enough, we will get the HTML using a headless browser. Just as we would in a browser environment. We will pass the HTML to cheerio and then query it to extract data. It lets us find DOM nodes using selectors, get text or attributes, and many other things. If you use TypeScript, they include "definitions and a type guard for Axios errors."Ĭheerio is a "fast, flexible & lean implementation of core jQuery" Javascript library. It allows several options, such as headers and proxies, which we will cover later. We are using Node v12, but you can always check the compatibility of each feature.Īxios is a "promise based HTTP client" that we will use to get the HTML from a URL.
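To make the pagination step more concrete, here is a minimal sketch in plain Node.js with no external packages (it relies on the global `URL` class, available since Node 10). `toPaginationUrls` resolves raw hrefs against the page URL, drops fragments and duplicates, and keeps only pagination URLs, which is the "capture all the links and filter them by content" idea. The `/page/<number>/` pattern is an assumption about how scrapeme.live paginates, not something stated above. `crawlPagination` then visits the pages breadth-first. Both function names and the `getHrefs` parameter are hypothetical; in a real scraper, `getHrefs` would wrap Axios and cheerio, as shown in the comments.

```javascript
'use strict';

// ASSUMPTION: pagination pages live under ".../page/<number>/"
// (WooCommerce-style URLs); adjust the pattern for other sites.
const PAGINATION_PATH = /\/page\/\d+\/?$/;

// Turn raw href values into a de-duplicated list of absolute pagination URLs.
function toPaginationUrls(hrefs, pageUrl) {
  const urls = new Set();
  for (const href of hrefs) {
    if (!href) continue; // cheerio's attr() returns undefined when href is missing
    let absolute;
    try {
      absolute = new URL(href, pageUrl); // resolve relative links like "/shop/page/2/"
    } catch (err) {
      continue; // skip malformed URLs instead of aborting the crawl
    }
    absolute.hash = ''; // "#top" and similar fragments point to the same page
    if (PAGINATION_PATH.test(absolute.pathname)) {
      urls.add(absolute.href);
    }
  }
  return [...urls];
}

// Visit pagination pages breadth-first, starting from startUrl.
// getHrefs(url) is a HYPOTHETICAL async helper returning the hrefs on a page.
// With Axios and cheerio it could look like:
//   const { data } = await axios.get(url);
//   const $ = cheerio.load(data);
//   return $('.page-numbers a').map((_, a) => $(a).attr('href')).get();
async function crawlPagination(startUrl, getHrefs, maxPages = 50) {
  const visited = new Set();
  const queue = [startUrl];
  while (queue.length > 0 && visited.size < maxPages) {
    const url = queue.shift();
    if (visited.has(url)) continue; // the same page can be queued twice
    visited.add(url);
    const hrefs = await getHrefs(url);
    for (const next of toPaginationUrls(hrefs, url)) {
      if (!visited.has(next)) queue.push(next);
    }
  }
  return [...visited]; // pages in the order they were visited
}
```

A call such as `crawlPagination('https://scrapeme.live/shop/', getHrefs)` would return the visited page URLs. Keeping the fetching behind `getHrefs` means the crawl logic can be tested without network access, and the same loop works whether the HTML comes from Axios or from a headless browser such as Playwright. The `maxPages` cap is a safety limit in case the filter lets through more links than expected.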
